Artificial Intelligence (AI) is transforming how organizations operate—from automating business processes to enhancing customer experiences. As AI adoption accelerates, so do concerns around data privacy, model integrity, and regulatory compliance.

At Olive + Goose, we believe that innovation should never come at the cost of trust. That’s why we treat secure and responsible AI deployment as a requirement, not an afterthought. Organizations must shift from reactive security to proactive governance, embedding safeguards into every layer of the AI lifecycle.

This blog outlines a practical framework for deploying AI securely within Microsoft 365 and Azure environments, balancing innovation with governance and helping organizations reduce risk while building trust.

Why Secure AI Deployment Matters

AI systems are only as secure as the data, infrastructure, and policies that support them. Without the right controls, AI can:

- Expose sensitive data

- Amplify bias or misinformation

- Be exploited through adversarial attacks

- Violate compliance obligations (e.g., GDPR, HIPAA)

Additionally, public trust in AI is increasingly tied to how transparently and securely it is developed and deployed. Organizations that fail to consider ethical and legal risks may find themselves facing reputational damage, regulatory fines, or both.

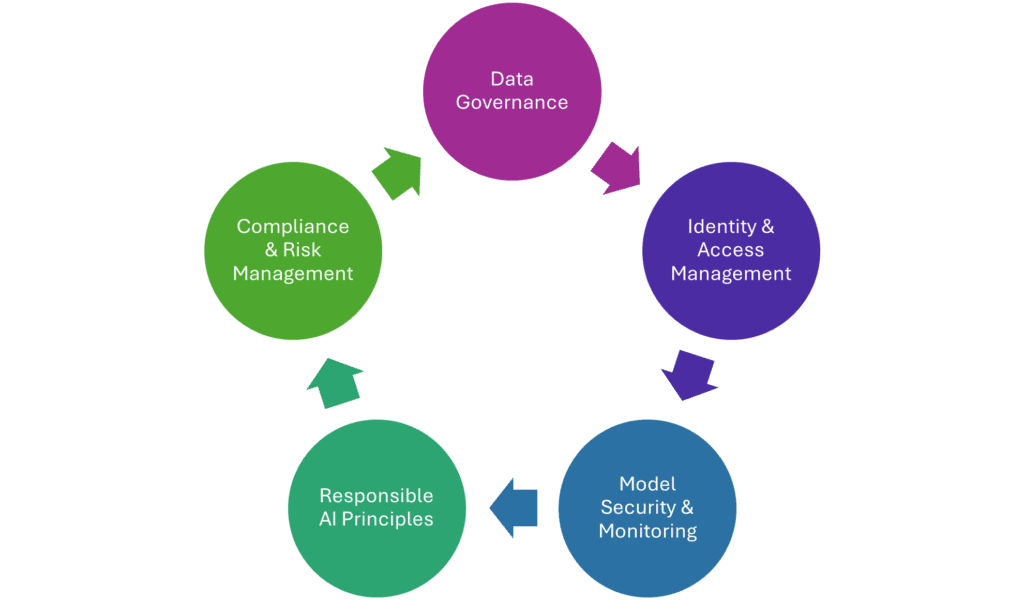

The Secure AI Deployment Framework

To responsibly scale AI within your organization, you need more than just models and data—you need a secure, structured foundation. At Olive + Goose, we align AI deployments with Microsoft’s trusted cloud technologies and responsible AI standards. Here are the five key pillars that define a secure and ethical AI framework:

1. Data Governance

AI begins with data—and protecting that data is foundational.

Use tools like Microsoft Purview to:

- Classify sensitive data (e.g., PII, financials, health records)

- Apply encryption and granular access controls

- Monitor data lineage and usage across systems

Also ensure that training data is:

- Representative and inclusive

- Anonymized where required

- Regularly audited for bias and quality

Strong data governance ensures AI models are not only high-performing but also compliant and ethically grounded.
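To make the classification and anonymization steps above concrete, here is a minimal sketch in plain Python. It is not how Microsoft Purview works internally; the two regex patterns, the label names, and the `pseudonymize` helper are assumptions chosen for illustration. Note that keyed hashing is pseudonymization rather than full anonymization, so it may not satisfy every regulatory definition on its own.

```python
import hashlib
import hmac
import re

# Hypothetical patterns for illustration; production classifiers cover far more cases.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def classify(text: str) -> set[str]:
    """Return the label of every sensitive pattern found in the text."""
    return {label for label, pattern in PATTERNS.items() if pattern.search(text)}


def pseudonymize(text: str, secret: bytes) -> str:
    """Replace each match with a keyed hash: stable for joins, unreadable without the key."""

    def make_replacer(label: str):
        def _replace(match: re.Match) -> str:
            digest = hmac.new(secret, match.group().encode(), hashlib.sha256).hexdigest()
            return f"<{label}:{digest[:12]}>"

        return _replace

    for label, pattern in PATTERNS.items():
        text = pattern.sub(make_replacer(label), text)
    return text


record = "Contact jane@example.com, SSN 123-45-6789"
labels = classify(record)                      # which sensitive types are present
masked = pseudonymize(record, b"demo-secret")  # safe-to-share version of the record
```

The same secret always yields the same token for the same value, which keeps masked records joinable across datasets while the key itself stays under access control.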

2. Access Control and Identity Management

Restrict access to sensitive assets using Microsoft’s built-in identity solutions:

- Role-Based Access Control (RBAC) to manage permissions

- Conditional Access for real-time access policies

- Microsoft Entra ID (formerly Azure AD) for identity federation and SSO

All access should be logged and audited to ensure accountability and support incident response.

In a Zero Trust environment, identity becomes the new perimeter. Proper access management prevents insider threats and limits the blast radius of potential breaches. Learn more: Microsoft Entra.
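The pattern described above, checking a role before granting access and logging every decision, can be sketched in a few lines. This is an illustrative model only, not the Entra ID or Azure RBAC API; the role names, permission strings, and the `AccessGuard` class are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping, for illustration only.
ROLE_PERMISSIONS = {
    "ml-engineer": {"model:read", "model:deploy"},
    "analyst": {"model:read"},
}


@dataclass
class AccessGuard:
    """Deny-by-default permission check that records every decision."""

    audit_log: list = field(default_factory=list)

    def is_allowed(self, user: str, roles: list[str], permission: str) -> bool:
        # Access is granted only if at least one known role carries the permission.
        allowed = any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)
        # Log allowed and denied requests alike, so incident response has a full trail.
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "user": user,
                "permission": permission,
                "allowed": allowed,
            }
        )
        return allowed


guard = AccessGuard()
can_read = guard.is_allowed("ana", ["analyst"], "model:read")
can_deploy = guard.is_allowed("ana", ["analyst"], "model:deploy")
```

Unknown roles resolve to an empty permission set, so anything not explicitly granted is denied, which matches the Zero Trust posture described above.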

3. Model Security and Monitoring

AI models must be safeguarded against tampering, theft, and misuse.

Use Azure Machine Learning to:

- Manage model lifecycles

- Track performance, drift, and bias over time

- Apply version control and rollback policies

For advanced threat protection, Microsoft Defender for Cloud can detect anomalies across your AI workloads and alert you to suspicious activity.

Security must extend beyond the code to include AI-specific risks—like prompt injection, adversarial inputs, and data poisoning. Monitoring and retraining must be continuous, not occasional.
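One simple way to put continuous drift monitoring into practice is to compare the distribution of a feature in production against its training baseline. The sketch below uses the population stability index (PSI) as an example metric; that choice is ours, not something Azure Machine Learning prescribes, and the bin count and floor value are assumptions to tune.

```python
import math


def population_stability_index(baseline, current, bins=10):
    """PSI between two non-empty samples, using equal-width bins over the baseline range."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            # Values outside the baseline range land in the first or last bin.
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor avoids log(0) for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    p, q = proportions(baseline), proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


baseline = [i / 100 for i in range(100)]  # stand-in for training-time feature values
shifted = [v + 0.5 for v in baseline]     # stand-in for drifted production values

psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
```

A score near zero means the two samples look alike, and larger scores mean more drift. The alert threshold that should trigger a review or retraining depends on the feature and the use case, so treat any fixed cutoff as a starting point.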

4. Responsible AI Principles

Beyond security, organizations must consider the ethical implications of AI.

One way to do this is to adopt Microsoft’s Responsible AI principles, which include:

- Fairness – Prevent discriminatory outcomes

- Transparency – Explain how decisions are made

- Accountability – Assign ownership for AI systems

- Privacy – Respect user data and consent

Use tools like InterpretML and Fairlearn to operationalize these values in your AI workflows.
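As an illustration of what operationalizing fairness can look like, the sketch below computes per-group selection rates and the gap between them, a measure often called demographic parity difference. It is written in plain Python to stay dependency-free; Fairlearn provides its own metrics for this kind of check. The function names and the toy data are assumptions for the example.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals, positives = defaultdict(int), defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups (0 means equal rates)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data: model decisions (1 = approved) and a sensitive attribute per record.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = parity_gap(preds, groups)
```

A gap of zero means every group is approved at the same rate. What size of gap is acceptable is a policy decision, which is where the accountability principle above comes in.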

5. Compliance and Risk Management

AI systems must align with a growing landscape of compliance standards, including:

- GDPR, HIPAA, CCPA

- ISO/IEC 27001

- NIST AI Risk Management Framework

- Industry-specific regulations (e.g., financial services, public sector, healthcare)

You can leverage Microsoft Compliance Manager to assess and maintain your compliance posture across cloud environments.

Proactive compliance is key to sustainable AI deployment. A well-structured governance model not only mitigates regulatory risk; it also enables innovation at scale by removing bottlenecks.

Final Thoughts: How Olive + Goose Helps

AI can be a force multiplier, but only when deployed securely and responsibly.

At Olive + Goose, we partner with organizations to innovate with confidence. Our services are designed to embed trust at the heart of your AI journey:

- AI readiness assessments with a focus on security and compliance

- Microsoft Purview implementation for data classification and governance

- Entra ID and Conditional Access for secure identity and access management

- Responsible AI strategy design and policy development

- Deployment support for Microsoft 365 Copilot and Azure OpenAI solutions

We don’t just help deploy AI—we help organizations operationalize AI governance, security, and trust across their Microsoft cloud landscape.

Disclaimer: AI-assisted, Olive + Goose approved.
